Moltbot is a persistent digital operator. Unlike traditional AI chatbots like ChatGPT and Claude, which reset user data after every session and operate as isolated, user-facing applications, Moltbot is a headless background service with deep system access. It can manage persistent state and execute complex workflows across external systems such as Slack, Notion, WhatsApp, and Google Workspace.

Moltbot fundamentally alters the enterprise security landscape by transforming standard endpoints into privileged remote operators that reside inside the corporate trust boundary. Unlike passive chatbots, these persistent agents inherit the user’s full network access, session cookies, and file permissions, effectively functioning as a user-installed Remote Access Tool (RAT) that bypasses traditional firewall and DLP controls. This architecture necessitates a bargain where utility is achieved only by granting the agent "god-mode" permissions, including arbitrary code execution and connecting to enterprise resources, creating a high-value target where a single compromise exposes the user’s entire digital identity and internal network access to attackers.

Keep reading to understand the risks applications like Moltbot pose to your organization and how Singulr AI can help with proactive governance of autonomous agents so they never become unmanaged backdoors into your enterprise infrastructure.

It’s Already in Your Environment

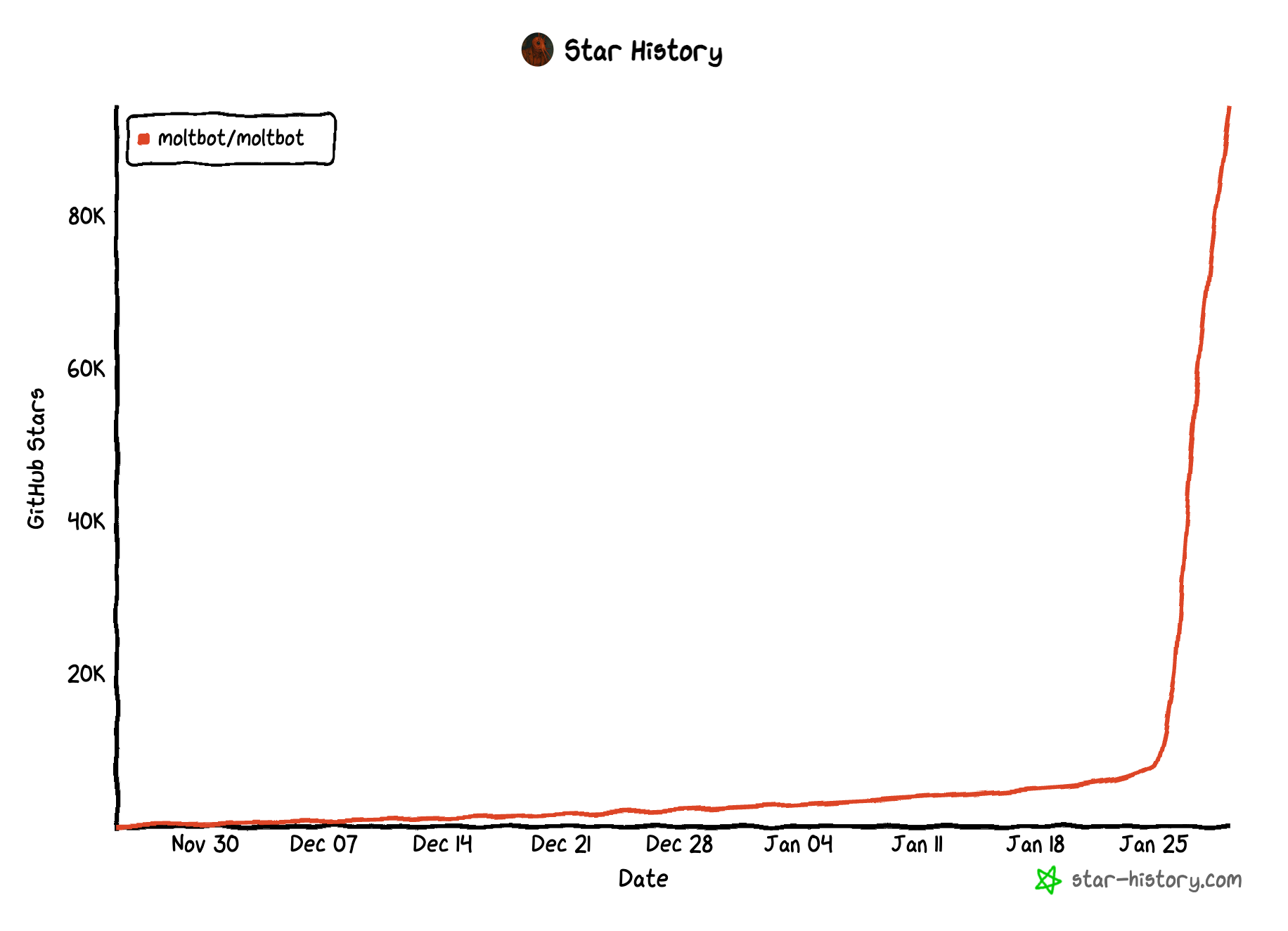

Moltbot’s installation process takes approximately 10 minutes. Any employee can deploy it locally on their machine or remote VMs without IT involvement. With 350K installations from its official NPM repository, there is a high chance someone in your enterprise is experimenting with Moltbot to enhance their productivity. 1000+ Moltbot instances already exposed to the internet.

Your trust boundary is collapsing

Moltbot is a local surrogate. Because it runs on the user’s hardware, it operates inside the VPN trust boundary.

Moltbot bypasses security perimeters because it acts with the user’s browser fingerprints and permissions. VPN tools cannot identify if this is an AI acting on behalf of the user. It can access:

- Internal corporate dashboards and Intranet services.

- Private APIs and development environments.

- Confidential data stored on disks and internal shared network storage.

The danger lies in Prompt Injection. Since the agent reads untrusted data, such as emails or web pages, a malicious actor could hide instructions in an email that trigger physical actions, like opening a reverse proxy, to allow the attacker to enter the trusted boundary.

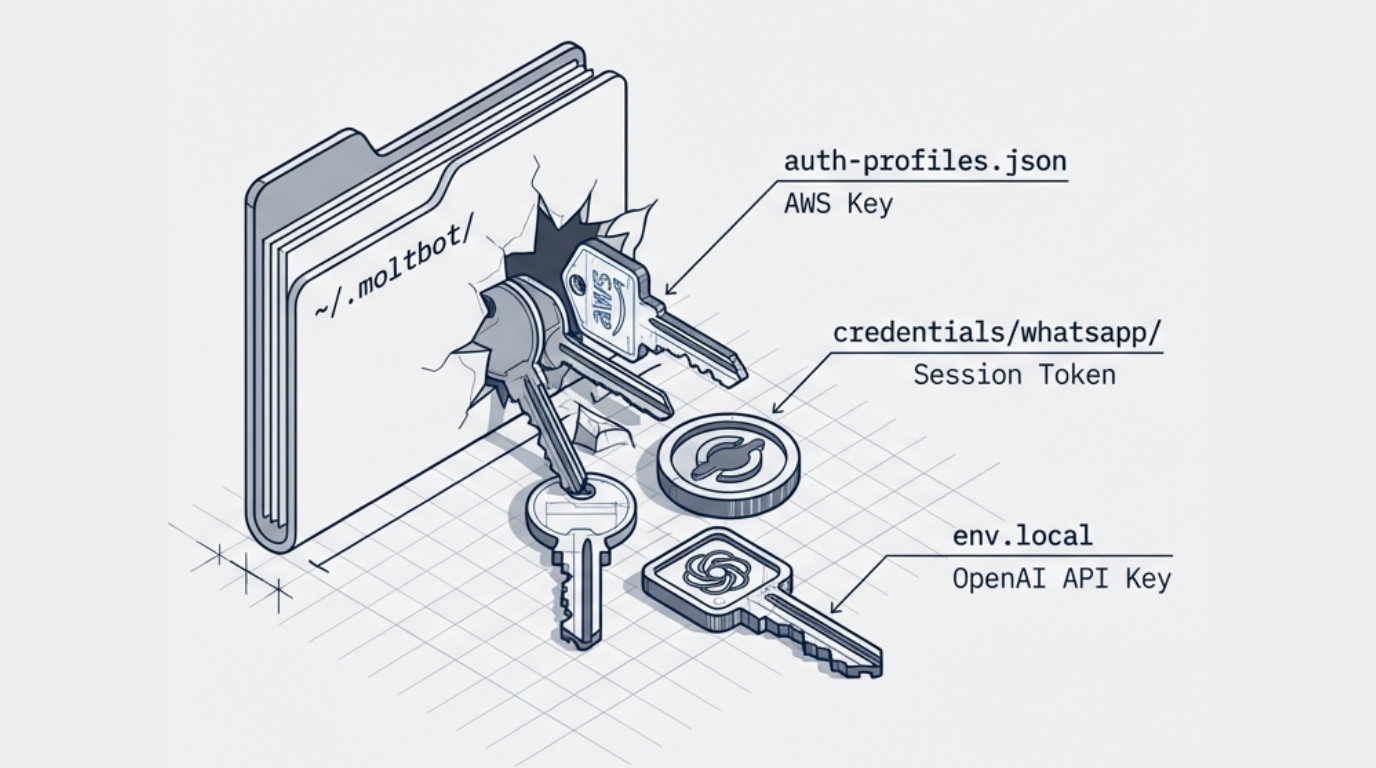

The Credential Loot box

Moltbot dangerously centralizes credentials. It stores API keys, OAuth tokens, and platform credentials in a single, unsecured JSON file (~/.moltbot/agents/*/auth-profiles.json). One compromised endpoint puts all these secrets and your organization at immediate risk.

- Slack and Teams workspace tokens

- AWS and cloud provider credentials

- GitHub personal access tokens

- AI provider API keys

- Personal messaging account sessions

Your existing DLP investment is essential, but not enough for AI risk

Employees can configure personal Telegram, Signal, or Discord accounts alongside corporate Slack. If an agent or an attacker gains control, they can rapidly aggregate sensitive corporate data and transmit it through personal channels that corporate DLP tools cannot detect.

Other Critical Risks in Enterprise

Arbitrary Code Execution

Moltbot’s bash execution tool permits running shell commands with user-level privileges. An attacker who gains control of the agent via prompt injection, plugin compromise, or direct access can execute arbitrary commands, including data exfiltration, privilege escalation attempts, and lateral movement.

Un-Sandboxed Plugin System: One day away from a supply chain attack

Moltbot’s un-sandboxed plugin architecture is a supply chain attack waiting to happen. Extensions execute with full system access, and project documentation is explicit: “Plugins run in-process with the Gateway, so treat them as trusted code.” The risk is direct and severe.

Gateway Network Exposure

The Moltbot gateway, when set to bind to all network interfaces (0.0.0.0), creates a powerful attack surface. Behind a reverse proxy, it may incorrectly treat external, proxied traffic as local traffic, granting unauthenticated admin access to your environment.

Recommendations: A Three-Phase Response

The AI threat landscape has fundamentally shifted. The risk is no longer limited to employees pasting sensitive data into ChatGPT. Today, any employee can deploy autonomous agents with persistent, privileged access to corporate infrastructure, credentials, and data, often without IT's knowledge or approval. Staying ahead of this threat requires a proactive, continuous approach to discovery, enforcement, and governance.

Phase 1: Discovery

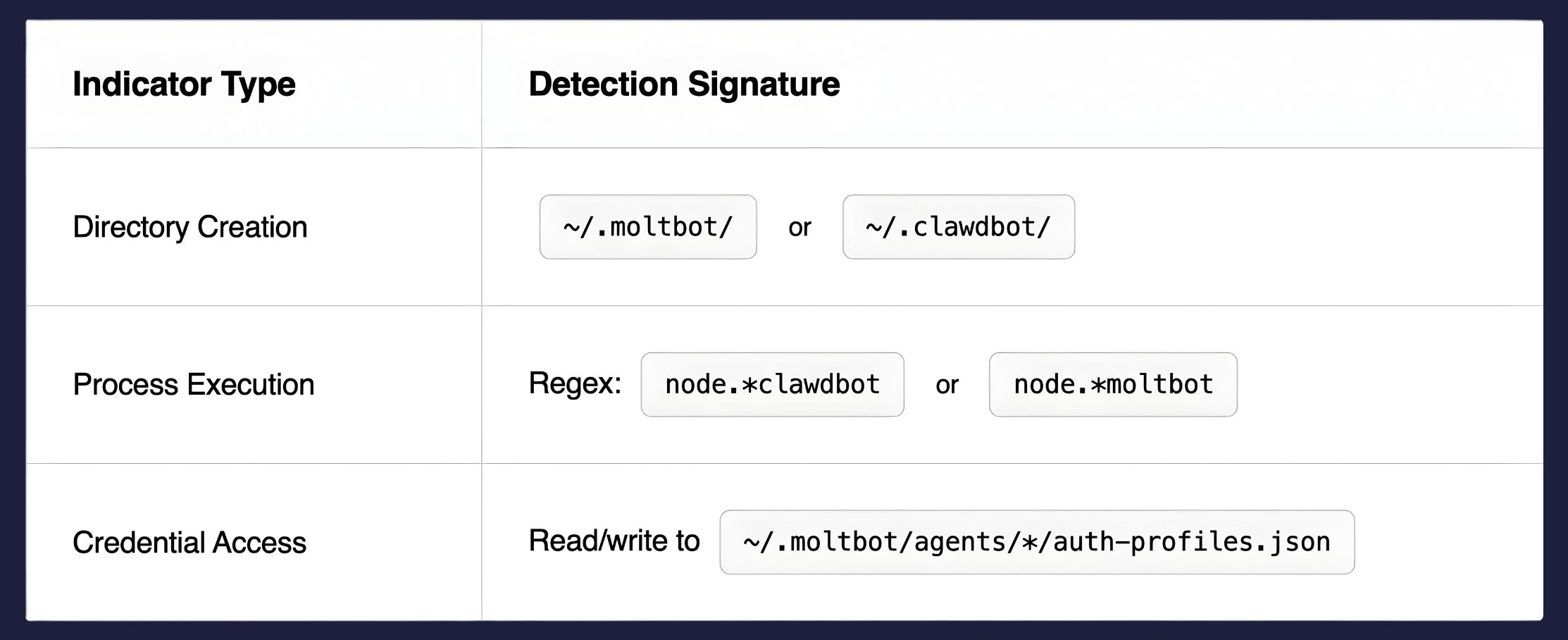

Moltbot runs as a user-level process and communicates over standard HTTPS to legitimate cloud services. It often evades traditional perimeter defenses entirely. Security teams must extend visibility to the endpoint layer.

Recommended Detection Indicators:

Configure your EDR solution to immediately alert on these indicators. However, manual signature maintenance is unsustainable; Moltbot is neither the first nor the last tool in this category.

The Continuous Discovery Challenge:

Agentic AI applications are being released at an unprecedented pace. Each promises enhanced productivity: close your laptop lid, let agents work autonomously and monitor results from your phone. For every tool you identify and block today, a dozen alternatives will emerge tomorrow.

Singulr Pulse addresses this gap by continuously monitoring the internet for emerging agentic AI applications. As new tools surface, Singulr updates detection signatures automatically and pushes them to integrated EDR/XDR platforms, providing unified visibility across your AI threat surface from a single dashboard.

Phase 2: Block and Restrict

Whether your discovery scan revealed active Moltbot installations or a clean environment, the next step is the same: establish preventive controls before the next incident.

The Limitation of One-Time Blocking:

You can configure your XDR solution to block the Moltbot binary today. But what about the hundreds of Moltbot clones and alternatives that will proliferate in the coming months? Manual policy updates cannot keep pace with the velocity of AI tool development.

A Sustainable Enforcement Model:

Effective protection requires:

- Automated signature updates as new agentic tools emerge

- Policy-based enforcement that adapts without manual intervention

- Integration with existing security infrastructure (EDR, XDR, SIEM)

Singulr enables security teams to centrally define policies for agentic AI tools, with enforcement distributed across your existing endpoint protection stack. When Singulr identifies a new high-risk application, policies propagate automatically, eliminating the lag between threat emergence and organizational protection.

Phase 3: Govern and Monitor

Blocking alone is insufficient. Some organizations will choose to permit controlled use of agentic AI tools under governance frameworks. Others will need ongoing visibility to detect policy violations and measure compliance.

Governance Requirements for Permitted Use:

- Approved tool and version inventory

- Mandatory configuration baselines (loopback binding, disabled shell execution)

- Credential storage audits

- AI provider relationship documentation for compliance

- User acknowledgment of acceptable use policies

Continuous Monitoring:

Even in environments where agentic tools are prohibited, monitoring must continue. Employees motivated by productivity gains will seek workarounds. New tools will emerge that aren't covered by existing policies. Shadow AI thrives in visibility gaps.

Singulr provides continuous monitoring of AI interactions across your environment, correlating endpoint telemetry with network traffic analysis to identify both known and emerging agentic applications, whether explicitly blocked, conditionally permitted, or previously unknown.

Conclusion

Agentic AI represents a structural shift in enterprise risk. Tools like Moltbot are early indicators of a new operating model. In this model, autonomous software runs continuously, retains state, and operates with employee-level privileges. Here, traditional security assumptions fail. Trust boundaries blur. Credentials concentrate, and intent becomes difficult to verify.

The real challenge is not any single tool. The problem is the loss of visibility and control. AI agents proliferate faster than manual security processes can adapt. Organizations that respond with one-time blocking or tool-by-tool remediation will stay reactive. They address yesterday’s risk while new agents emerge unnoticed.

Security leaders need a programmatic response.

That response requires:

- Continuous discovery to surface agentic tools employees deploy, not just those that IT approves

- Adaptive enforcement that keeps pace with rapid AI innovation

- Governance and monitoring that allow intentional AI use to remain controlled, auditable, and compliant

Singulr provides this control layer for agentic AI. With continuous discovery, real-time risk intelligence, red teaming, and policy enforcement at the application and agent level, Singulr restores visibility and enables durable governance without slowing innovation. Security teams can identify new agentic tools as they appear, apply unified policies across environments, and continuously monitor usage to support secure, compliant, and intentional AI adoption.

Organizations that succeed will not ban AI or allow unchecked experimentation. They will govern agentic AI as a new class of infrastructure risk, with the same rigor applied to identity, endpoints, and cloud workloads.

The window to get ahead of agentic AI risk is closing.

The time to establish control is now.

Clawdbot / Moltbot: When an “AI Assistant” Becomes a Privileged Remote Operator Inside Your Trust Boundary

.webp)